I recently came across a scenario where we needed to decommission a cluster from a Provider VDC (PVDC) while that cluster still hosted workloads the customer had already provisioned through the VCD tenant portal. To do so, we had to migrate the workloads of an organization from the org VDC (OVDC) resource pool on workload cluster2 (the one to be decommissioned) to the resource pool of the same OVDC on workload cluster1. This is possible because workload cluster1 and cluster2 are both part of an elastic PVDC spanning the two clusters.

There may be other ways to do the migration, but I want to share the simple procedure I followed in case it helps others who hit the same use case. Always keep it simple!

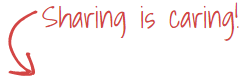

1- Disable the cluster2 resource pool under the PVDC

Since we want to decommission workload cluster2, we first need to disable it as a resource pool under the PVDC. This prevents the customer from provisioning new VMs onto that cluster, which would otherwise add to the VMs we already have to migrate.

NOTE: This does not affect the VMs already running on cluster2. They keep running; we are only removing the customer's ability to provision new VMs onto the cluster through VCD.
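The effect of this step can be pictured with a toy Python model. This is not the VCD API: `ResourcePool` and `placement_candidates` are hypothetical names, and the model simply assumes, as described above, that VCD only places new VMs on enabled resource pools while VMs already running on a disabled pool stay where they are.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ResourcePool:
    """Hypothetical model of one cluster's resource pool in an elastic PVDC."""
    cluster: str
    enabled: bool = True
    running_vms: list[str] = field(default_factory=list)


def placement_candidates(pools: list[ResourcePool]) -> list[str]:
    """Clusters that can still receive newly provisioned VMs (enabled pools only)."""
    return [pool.cluster for pool in pools if pool.enabled]


pools = [
    ResourcePool("cluster1", running_vms=["vm-a"]),
    ResourcePool("cluster2", running_vms=["vm-b", "vm-c"]),
]

# Step 1: disable the cluster2 resource pool under the PVDC.
pools[1].enabled = False

print(placement_candidates(pools))  # ['cluster1']
print(pools[1].running_vms)         # ['vm-b', 'vm-c'] (still there, still running)
```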

Log in to the provider portal, then navigate to Resources > Cloud Resources > Provider VDCs. Select your PVDC, then navigate to Resource Pools. Select your cluster and click Disable.

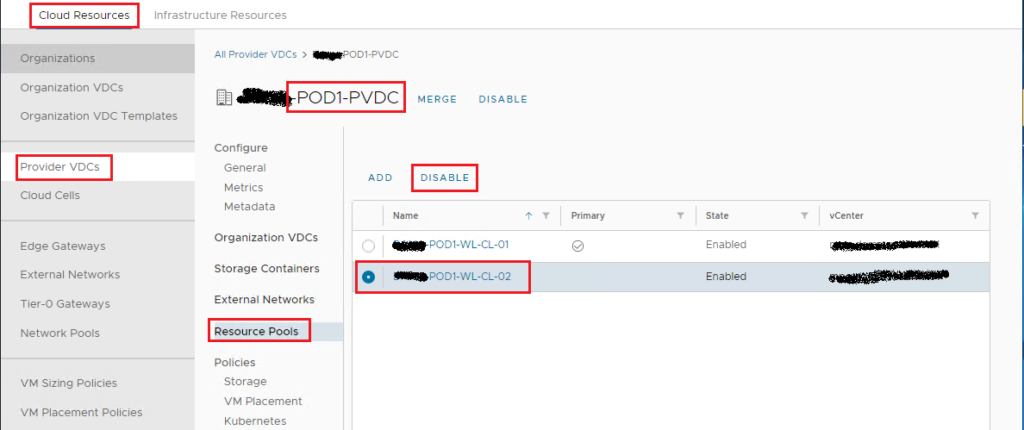

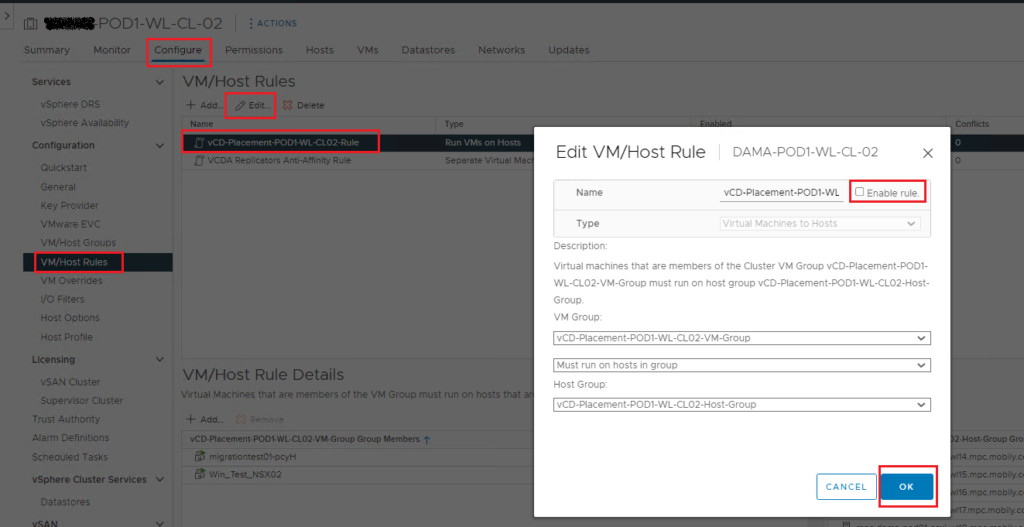

2- Disable the VM/Host rule of the cluster2 placement policy

Before we can migrate the workloads, we need to disable the VM/Host rule of the cluster2 placement policy. While the rule is enabled, the VMs provisioned on cluster2 can run only on cluster2 ESXi hosts and can't be migrated to cluster1.

In the vSphere Client, select cluster2 and navigate to Configure > Configuration > VM/Host Rules. Select the affinity rule of your placement policy, click Edit and disable it.
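Why the enabled rule blocks the migration can be sketched with another toy model. It assumes the placement policy is backed by a "must run on hosts in group" VM/Host rule (an assumption; check the rule type in your own cluster). `VmHostRule`, `can_run_on` and all host and VM names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class VmHostRule:
    """Hypothetical model of a 'must run on hosts in group' VM/Host rule."""
    vm_group: set[str]
    host_group: set[str]
    enabled: bool = True


def can_run_on(vm: str, host: str, rules: list[VmHostRule]) -> bool:
    """A VM may run on a host unless an enabled rule covering it excludes that host."""
    for rule in rules:
        if rule.enabled and vm in rule.vm_group and host not in rule.host_group:
            return False
    return True


cluster2_hosts = {"esx-c2-01", "esx-c2-02"}
rule = VmHostRule(vm_group={"tenant-vm"}, host_group=cluster2_hosts)

print(can_run_on("tenant-vm", "esx-c1-01", [rule]))  # False: rule pins the VM to cluster2
rule.enabled = False  # step 2: disable the rule
print(can_run_on("tenant-vm", "esx-c1-01", [rule]))  # True: the move to cluster1 is allowed
```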

3- Migrate workloads from cluster2 to cluster1

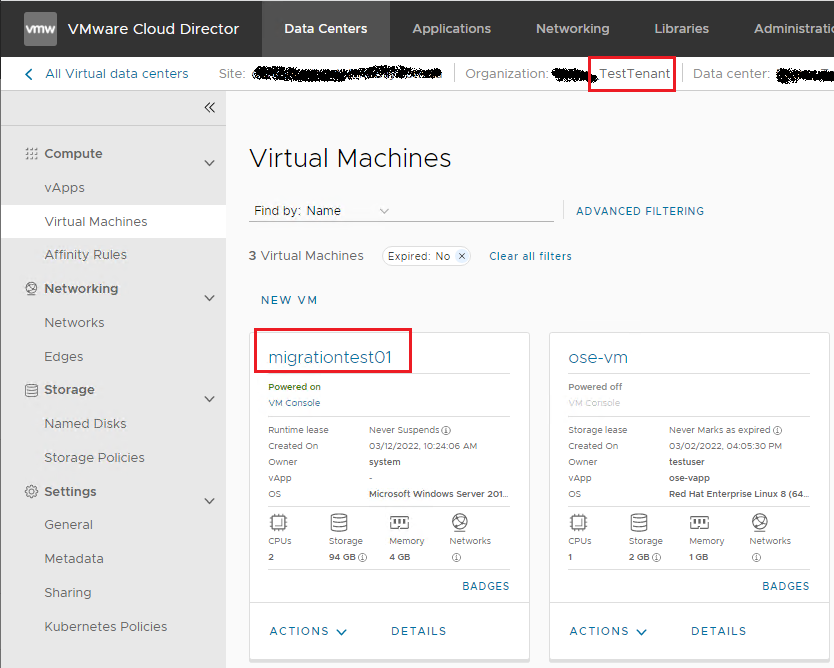

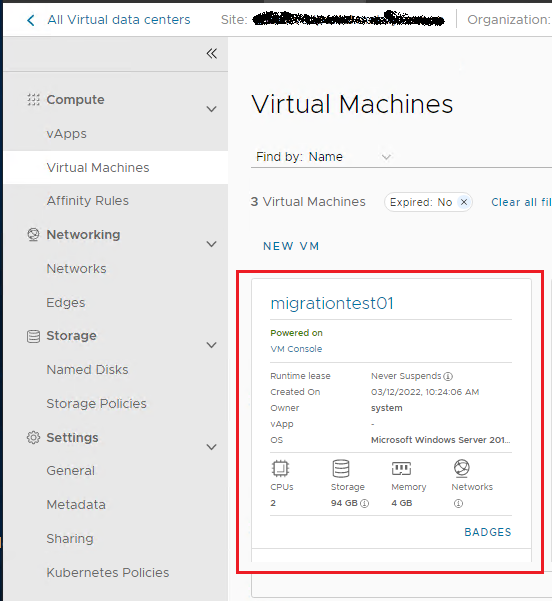

We have a VM provisioned through the VCD tenant portal with a placement policy that makes it run on workload cluster2. I will illustrate the procedure by migrating this single VM, but the same steps apply to the other workloads.

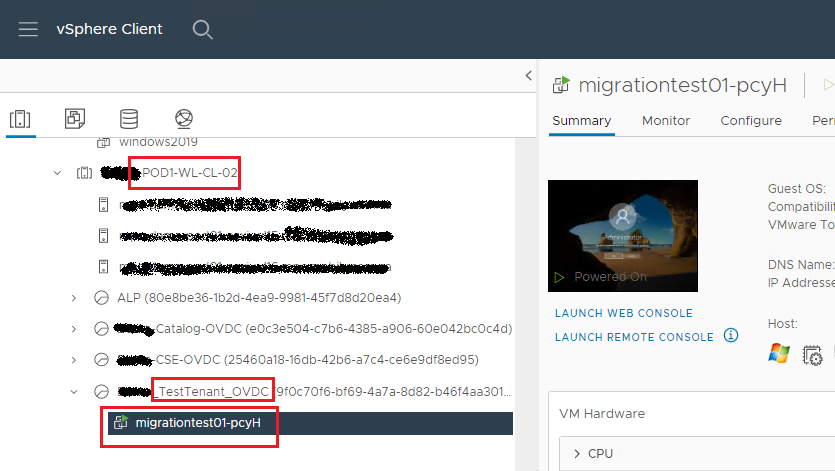

As we have shared storage spanning both workload clusters, we only need to vMotion the workload from a compute perspective, from cluster2 to cluster1. To do so, right-click the VM and choose Migrate.

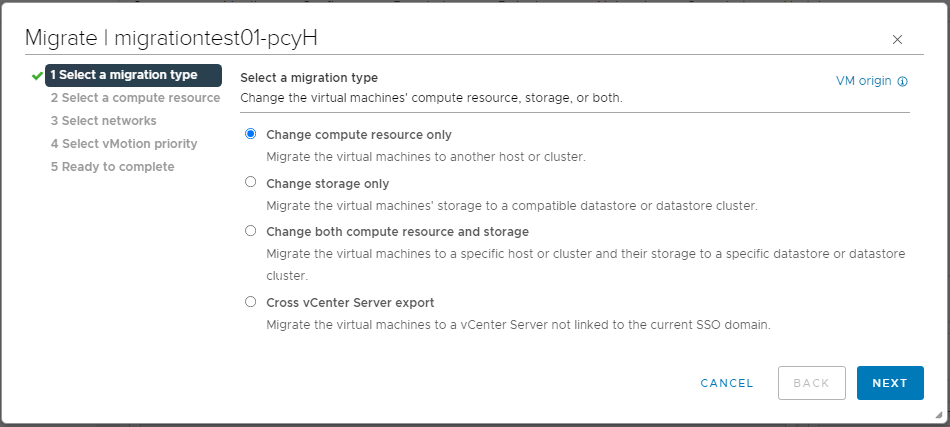

Select Change compute resource only, and click Next.
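The reason only the compute resource changes can be expressed as a simple check: if every datastore the VM uses is also visible to the target cluster, a compute-only vMotion is enough. The sketch below is a hypothetical helper with made-up datastore names; the returned strings mirror the options of the vSphere Client Migrate wizard.

```python
from __future__ import annotations


def migration_type(vm_datastores: set[str], target_cluster_datastores: set[str]) -> str:
    """Pick the vMotion flavour for moving a VM to another cluster.

    If every datastore the VM uses is also mounted on the target cluster
    (shared storage), only the compute resource has to change.
    """
    if vm_datastores <= target_cluster_datastores:
        return "Change compute resource only"
    return "Change both compute resource and storage"


shared = {"shared-ds-01"}
print(migration_type({"shared-ds-01"}, shared | {"c1-local"}))  # Change compute resource only
print(migration_type({"c2-local"}, shared))                     # Change both compute resource and storage
```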

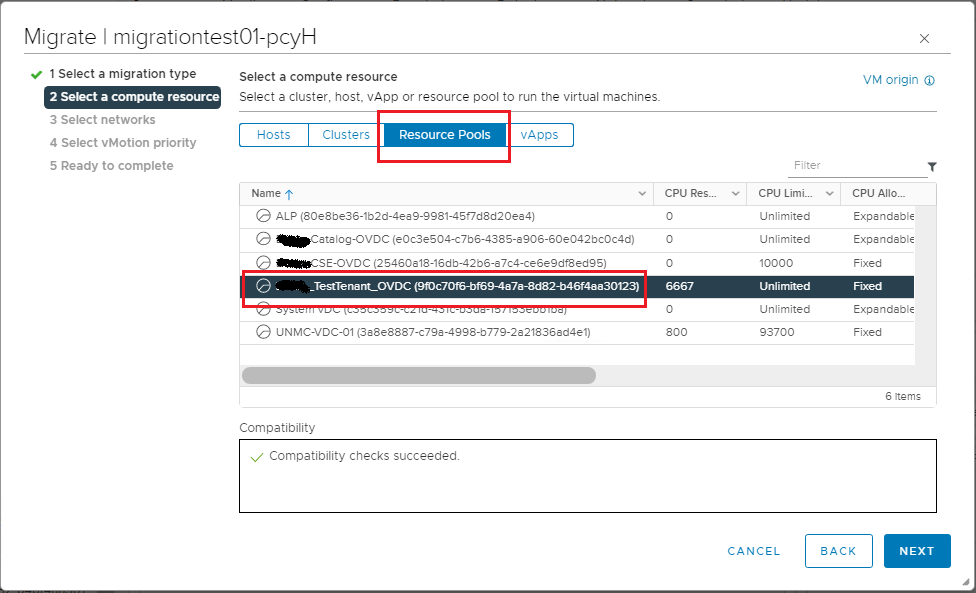

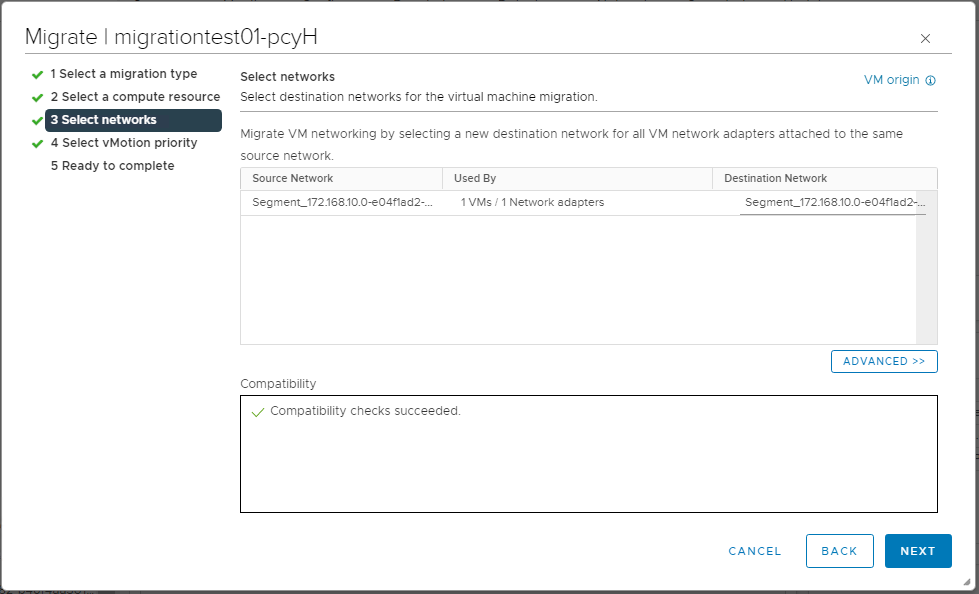

Select Resource Pools, then choose the resource pool for the same OVDC under cluster1.

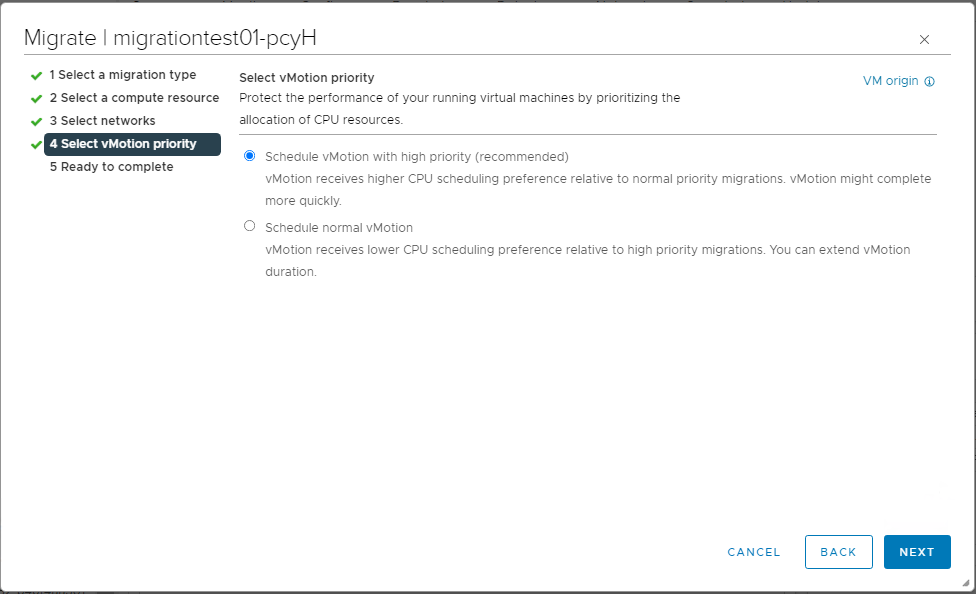

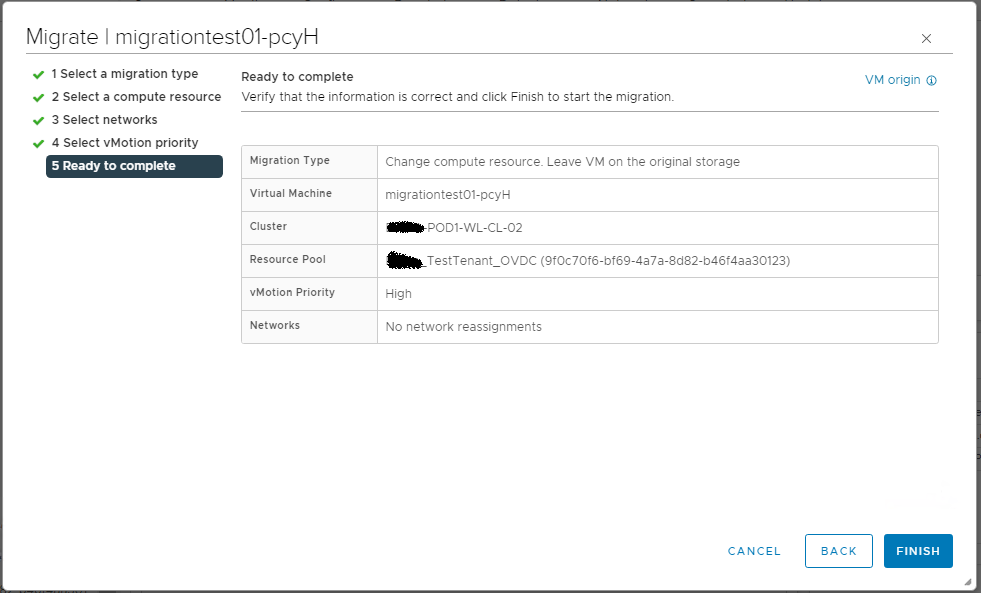

Proceed with the default selections, Next > Next > Finish.

Once the migration completes, you can see that the VM is running under the tenant OVDC resource pool in cluster1.
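For more than one VM, the key rule from the wizard is to pick the resource pool of the *same* OVDC on cluster1. The plain-Python sketch below builds such a migration plan from a hypothetical inventory; the pool names, VM names and `plan_migrations` are illustrative only and nothing here queries vCenter.

```python
from __future__ import annotations

# Hypothetical inventory: resource pool backing each (OVDC, cluster) pair
# in the elastic PVDC. Real pool names in vCenter will differ.
POOLS = {
    ("tenant-ovdc", "cluster1"): "rp-tenant-ovdc-c1",
    ("tenant-ovdc", "cluster2"): "rp-tenant-ovdc-c2",
}

# Hypothetical VMs and the cluster each one currently runs on.
VMS = [
    {"name": "web-01", "ovdc": "tenant-ovdc", "cluster": "cluster2"},
    {"name": "db-01", "ovdc": "tenant-ovdc", "cluster": "cluster2"},
    {"name": "app-01", "ovdc": "tenant-ovdc", "cluster": "cluster1"},
]


def plan_migrations(vms: list[dict[str, str]], source: str, target: str) -> list[tuple[str, str]]:
    """Return (vm, target resource pool) for each VM on the source cluster,
    always choosing the pool of the same OVDC on the target cluster."""
    plan = []
    for vm in vms:
        if vm["cluster"] != source:
            continue  # already on another cluster, nothing to do
        key = (vm["ovdc"], target)
        if key not in POOLS:
            raise KeyError(f"OVDC {vm['ovdc']} has no resource pool on {target}")
        plan.append((vm["name"], POOLS[key]))
    return plan


for name, pool in plan_migrations(VMS, "cluster2", "cluster1"):
    print(f"{name} -> {pool}")
```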

4- Change the placement policy of the migrated workload

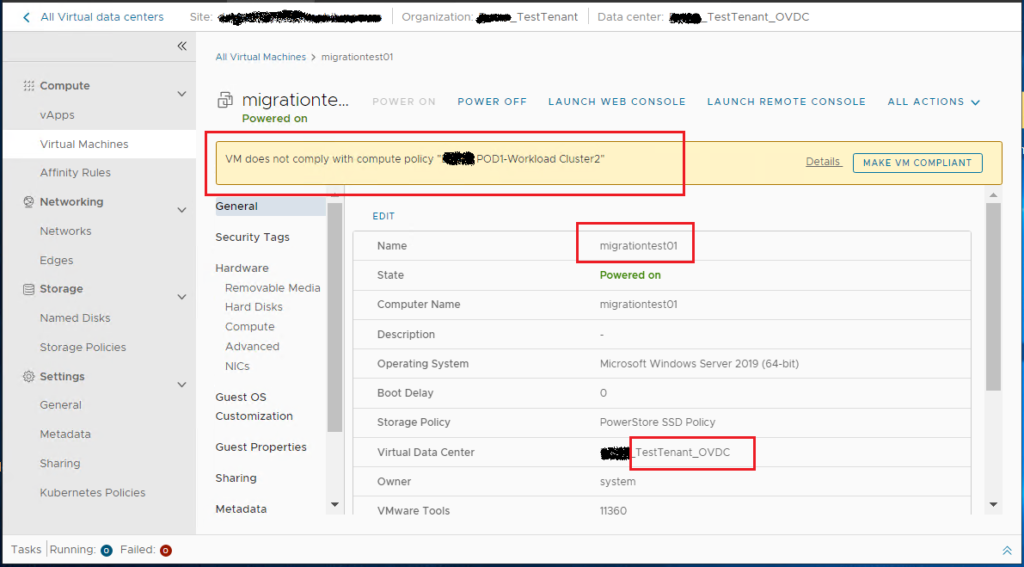

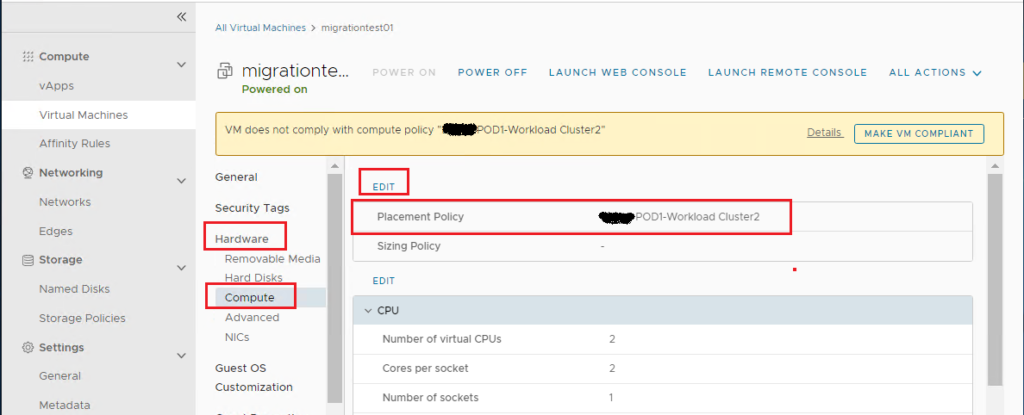

Log in to the tenant portal and check the status of the migrated VM. You will see a warning stating that the VM is non-compliant with its compute placement policy.

This is expected: the VM was originally provisioned on cluster2, and the placement policy attached to it is still the cluster2 policy, even though from the vSphere perspective the VM now runs on cluster1.

To make the VM compliant with its compute policy from the VCD perspective, we need to change its placement policy.
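The warning boils down to a mismatch between the cluster targeted by the VM's placement policy in VCD and the cluster vSphere actually runs it on. The toy model below (hypothetical `TenantVm` and `is_compliant`, not VCD's actual compliance logic) shows the state right after the vMotion and after the policy change.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TenantVm:
    """Hypothetical view of a VM: its VCD placement policy vs. where vSphere runs it."""
    name: str
    policy_cluster: str   # cluster targeted by the attached placement policy
    running_cluster: str  # cluster the VM actually runs on in vSphere


def is_compliant(vm: TenantVm) -> bool:
    """Compliant only when the policy target and the actual cluster agree."""
    return vm.policy_cluster == vm.running_cluster


vm = TenantVm("tenant-vm", policy_cluster="cluster2", running_cluster="cluster2")
vm.running_cluster = "cluster1"  # after the vMotion in step 3
print(is_compliant(vm))          # False: VCD shows the non-compliance warning
vm.policy_cluster = "cluster1"   # after editing the placement policy in step 4
print(is_compliant(vm))          # True
```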

Navigate to Data Centers > Virtual Machines. Click the VM and navigate to Hardware > Compute. You will see that the placement policy still points to cluster2.

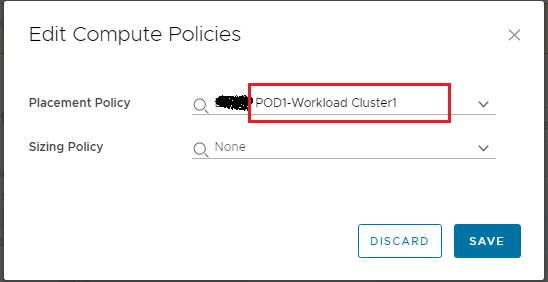

Click Edit, change the placement policy to the cluster1 policy, and click Save.

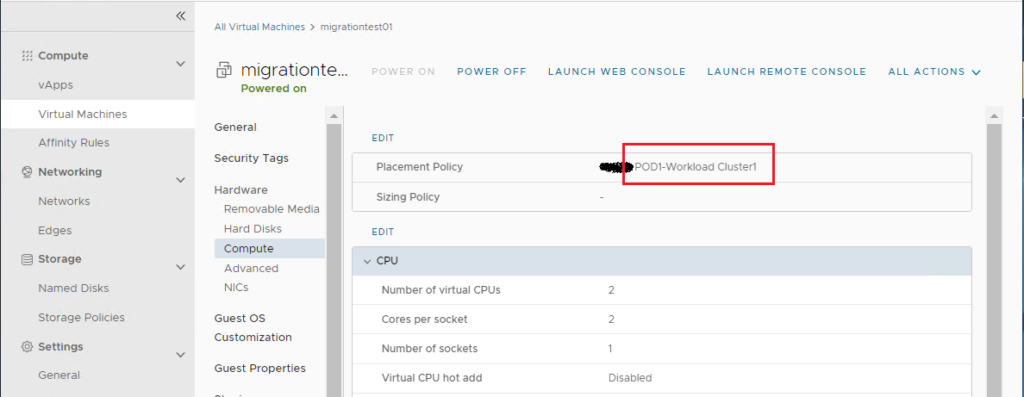

The placement policy is now changed and the warning message has disappeared, which means the VM is compliant again.
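With many migrated VMs, this edit is repeated per VM, and the bookkeeping amounts to remapping the old policy to the new one. The sketch below models that in plain Python with hypothetical policy and VM names; it only updates an in-memory dictionary and does not call VCD, so the actual change is still made in the tenant portal as described above.

```python
from __future__ import annotations

# Hypothetical mapping from the old placement policy to its replacement.
POLICY_REMAP = {"cluster2-placement-policy": "cluster1-placement-policy"}

# Hypothetical state after step 3: every VM now runs on cluster1,
# but some still carry the cluster2 placement policy.
vms = {
    "web-01": "cluster2-placement-policy",
    "db-01": "cluster2-placement-policy",
    "app-01": "cluster1-placement-policy",
}


def remap_policies(vm_policies: dict[str, str], remap: dict[str, str]) -> list[str]:
    """Swap each VM's policy according to `remap`; return the VMs that changed."""
    changed = []
    for name, policy in vm_policies.items():
        new_policy = remap.get(policy)
        if new_policy is not None and new_policy != policy:
            vm_policies[name] = new_policy  # updating an existing key is safe mid-iteration
            changed.append(name)
    return changed


print(remap_policies(vms, POLICY_REMAP))  # ['web-01', 'db-01']
```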

That completes the VM migration. Note that this was a live migration without any downtime!

Many thanks for taking the time to read this post.

I hope it was informative.